You break my Heart (Heartbleed for Dummies)

A great catch

On April 7, 2014, a major security issue was fixed in OpenSSL. For non-geeks: OpenSSL is a software library (code that anybody can reuse) released under a free-software license, which implements the cryptographic protocols (SSL/TLS) that secure communications on the Internet. It is free software as in free speech: the source code is available online and anybody can contribute their own corrections and fixes. So you have the freedom to do whatever you want with it (within the license terms). But OpenSSL is also free as in free beer: you get a great piece of software without paying anything. However, this nice present comes without any guarantee, and if you reuse it, it is your duty to check that it satisfies your quality criteria. Who uses OpenSSL? More or less everybody: it is used by most web servers and by many client applications and, since most of the applications we use today are web-based, you are probably using it.

Can you explain this bug?

One of the best efforts to explain it is the xkcd webcomic. To put it simply: when your computer talks to a server, it sometimes keeps the connection alive so that it does not have to set up a new connection every time you want to exchange information. For that purpose, your program (web browser, application, etc.) sends a "heartbeat" request to make sure the server is still there, asking it to echo back a specific message and announcing the length of that message. The bug is that the server trusts the announced length without checking it against the message it actually received. If the client claims a longer message than the one it sent, the server replies with the message plus whatever sits next to it in the server memory (up to 64 kilobytes per request). The server should echo the message only and never send any additional information.

The problem is that this additional information is essentially random and might contain useful and/or critical data. In fact, it can be any data held in the memory of the server process (passwords, web content, etc.). Some argued that only non-critical data had been exposed, but a challenge showed that even private keys (the ones you are never supposed to share when using cryptography) could be retrieved. For example, there is evidence that attackers exploiting the bug on the Yahoo Mail service could obtain other users' passwords.

"Cleaning OpenSSL bugs might take some time" - picture taken from the Martino Sabia gallery

Who introduced this bug? (so that we can bury his body, say he is a communist, go to his house and steal his groceries)

As OpenSSL is free software with contributors all over the world, anybody can propose changes to the code. Thanks to version-control tools, we can track who modified which part of the code, and thus know who introduced the bug. It turns out that the code related to the bug was introduced by a German developer (Robin Seggelmann, see the related git commit). Unfortunately, this nationality does not fit the theory of suspicious people who think spying agencies introduced the bug on purpose. And, according to the person who introduced it, it was a mistake made while working on bug fixes and new features. In addition, the change was reviewed by somebody else, who also missed the potential flaw.

Of course, since the public disclosure, there have been plenty of rumors about who really introduced the code, whether the original developer was paid to do it, who had already exploited the vulnerability, etc. Based on my experience in software engineering and the code reviews I have done so far, such a bug is pretty common in software and usually not spotted during reviews. This is why code reviews are necessary but not sufficient: you still need other methods (static analysis, runtime checking, etc.) for safe and secure coding.

But enough debate: instead of taking part in this discussion, let's stick to the facts.

Why was the bug not fixed earlier?

The bug was introduced in December 2011 and eventually fixed in April 2014: it was there for more than two years. Within this time frame, anybody who knew about the issue may have exploited it to steal data from service providers using a vulnerable version of OpenSSL.

Finding such a bug requires reviewing the code, either manually (a developer reads the code) or with automated analysis tools or testing. In any case, it requires some effort, which comes at a cost. The problem is that OpenSSL is free software (as in free speech) and contributors might introduce new code that contains security flaws. Which is just normal: by definition, humans make mistakes, and when writing code they sometimes introduce errors (think about the GnuTLS bug). Of course, when coding errors might have a significant impact, there are reviews. But this time, the review was done manually and the reviewer did not catch the bug. Which (again) is normal: most of the time, the code being reviewed has no bug, so reviewers are not used to digging deep. Which is (again) normal and human. Think about a new security guard who controls people going in and out of a building: he will be very careful during his first days but, after a while, he will know who is supposed to go in and out and will sometimes make an exception and let you in even if you do not have your badge. This is human: once you are used to a routine, you become less careful. This is probably why car accidents are more likely to occur on roads you take every day (for example, when commuting to work).

But let's come back to software analysis: besides manual code review, other techniques can detect such issues: testing, static analysis, etc. OpenSSL does not seem to have had a test procedure that could catch such a bug. And users do not seem to put much effort into testing this piece of software, despite its criticality and importance. Which is (again) human: why would you test something that has been used by many other people for several years and is free? You just assume that other folks will detect any defect and take the free (as in free beer) software as is!

This was probably the biggest mistake here: users of OpenSSL did not understand that free software is free as in free speech, not only as in free beer. In other words, you can take the code, use it and contribute, but you may face additional work and cost if you want to make sure the software is safe and compliant with your own quality standards. Fortunately, some users (such as Google) have engineers who investigate such code (and eventually discover and fix issues), but the late discovery shows that this effort is not sufficient.

Now, the interesting thing is that, as this piece of software is particularly critical, other people do pay this cost, not to fix it but to exploit it. They may run the tests, pay for the technology to find the bug and exploit it until its public disclosure. In other words, some are willing to pay the cost of testing in order to retrieve private data. In this particular case, the Return On Investment (ROI) for finding and exploiting this bug is definitely worth it: institutions can steal data at (almost) no cost from users all over the world. It requires neither high processing capacity (unlike brute-forcing an encryption key) nor high bandwidth (unlike a Denial of Service attack). You could set up a bunch of Raspberry Pis ($50 each) and try to steal data 24/7.

Also, this does not mean or demonstrate that open-source or free software is of low quality: a recent study by Coverity shows that open-source projects have a better code quality than proprietary products. On the other hand, because the code is publicly available, it is easier to find issues in it, whereas proprietary software is more difficult to analyze.

The important questions now are: how many critical bugs like this one are still unfixed? How safe is proprietary software, given that it is harder to analyze? And what are the best mitigation techniques?

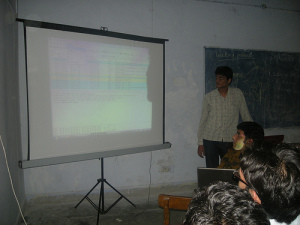

OpenSSL Code Review - Picture under Creative Commons by Sumit Sati

Can the NSA find any pictures of my cat naked using this bug?

Since the Snowden revelations, everybody is nervous about their privacy. We shifted from a behavior where everybody shares everything everywhere to one where we are suspicious of everything. Conspiracy theories about potential uses of the bug have spread over the Internet: did spying agencies know what was going on? If so, did they exploit the bug or not?

Some sources report that the NSA was aware of the bug and had been using it for a long time (about two years). The NSA's official Twitter feed states that the agency was not aware of the bug. But after all, does it really matter? If you use online services such as Gmail, Facebook or Twitter, they probably have more than one way to access your data.

On the other hand, other spying agencies and/or companies may have used the bug to access private data, including private encryption keys. As usual, rumors have spread about exploitation of the bug before the fix was released, but no serious evidence has been made available so far.

Which companies were affected?

It is important to know who is really impacted by the bug. What really matters is whether your service provider is affected: even if you never exploited the bug yourself, its servers may have disclosed private information, and others might have exploited the bug to retrieve the data you stored with various service providers.

Knowing exactly who is impacted depends on the version of OpenSSL your service provider used. Some services were not impacted at all, while others might have sent part of your data without knowing it.

For the impacted services, a timeline has been published by the Sydney Morning Herald. It shows how the bug was discovered, how it was disclosed and when it was eventually fixed in popular operating systems. It turns out that only a few of them were informed early, which is understandable: the more people know about the bug, the more potential attacks there can be. And since almost 70% of web servers use OpenSSL, many sites were affected when the bug went public.

How can we avoid such a situation in the future?

As pointed out earlier in this post, this error is likely due to the manual development process: the developer made a mistake (but who doesn't, once in a while?) that was not caught by the reviewer (and again, who has never made a mistake when checking something?). The whole development effort relied on two people working manually, whereas such issues can be found with other techniques, such as:

- Using automated analysis tools. Automated analysis tools are computer programs, so they do not get tired or complacent like a human reviewer. These programs can also run daily as the code evolves, to catch regressions and detect new issues in code freshly added by developers. Checks such as code coverage, coding-guideline compliance, etc. can be automated. The problem? Someone has to pay the cost: maintaining an infrastructure to run the tests, having a team to make sure issues are resolved, etc.

- Increasing the workforce. Have more people work on the project and review the code. In this case, the code was reviewed by one person; having more reviewers would have increased the chance of catching the bug.

- Making independent reviews. An independent code review can definitely address this type of issue. As this kind of review is also partly manual, it may not spot every issue, but it is definitely useful and could be done, for example, at each major milestone or release.

This list is not complete, but these are likely the usual techniques for finding such issues. Commercial projects use this type of review, so why not free software as well? Someone has to pay the cost. And, as most OpenSSL users are also competitors, are they willing to pay for a review that benefits their competitors? As far as I know, there is no such initiative: reviews and investigations are not coordinated and are done by each company separately. I might be totally wrong (I am not involved with the biggest users of the software and have no evidence of coordination initiatives), but it seems that a joint initiative would be useful and everyone could benefit from it.

Are there other potential bugs like this one?

From a statistical point of view, all the software you are currently using to read this article potentially contains a bunch of bugs. Think about what your machine is currently running:

- an Operating System - the kernel alone (not the graphical part) is made of several million lines of code. In 2011, Linux was made of more than 15M lines of code (and remember that Linux is the kernel of Android phones). And this is just the kernel: we do not even include the graphical part of your system.

- a web browser - almost 4M lines of code (at least for Firefox, probably one of the best browsers)

- a compiler used to convert source code into executable binaries - GCC (one of the most popular compilers, used for example to compile the Linux kernel) was made of more than 7M lines of code in 2012.

According to different sources, the number of bugs per line of code varies with several factors (such as the language, the experience of the developers, coding rules, etc.). Even with the lowest estimate of 1 bug per 1000 lines of code (realistic estimates are more likely 10 to 20 bugs per 1000 lines), it is obvious that the software you are currently running has some flaws and defects. On top of that, add developers who may introduce defects on purpose, and you get a good idea of the level of trust you can put in your computer. There is a reason why NIST estimated in 2002 that software errors cost the U.S. economy approximately $60B per year: statistically, your computer has bugs. The question is: how severe are they, and how can they be exploited?

How can I stay safe?

First of all, you can test the servers of your service providers. Second, the best protection is common sense: keep private data ... private. Do not put online data you do not want to disclose. Online services are not safe unless you control the underlying software that hosts them. This is a necessary but not sufficient condition (see below).

It sounds ridiculous and old-school, but this is just common sense: if you do not want to take the risk of disclosing data, do not share it! Keep your private information at home, back it up on a hard drive and do not send it to Google Drive, Dropbox or other online storage services. Convenience has a price and, as has been pointed out for a long time, you might pay with your privacy.

So, what about people hosting their own online services (on their own server running a Linux-based system such as FreedomBox)? They are vulnerable too, despite trying to protect themselves by avoiding common online services. Well, because such a server is smaller, it is potentially a less interesting target. Of course, once the bug was disclosed, many bots automated the attack and tried to get data from any host. A common guideline is to avoid the latest version of a software and stick to established, well-known versions. But this might not be sufficient: even the current Debian stable (wheezy) was exposed. However, this rule might protect you from future bugs that are discovered shortly after being introduced.

The Take-Away

What the average Joe should do to protect himself from potential new security issues:

- Use common sense. DO NOT PUT ONLINE DATA YOU DO NOT WANT TO SHARE. Do not trust online services you do not control.

- Do not use the same service for everything. If this service is hacked, interrupted or experiences issues, you can lose data or be stuck while it is unavailable.

- Use free software as much as you can. Forget the bullshit trends and stick to this rule. The code of free software is available, so bugs can be discovered and fixed as early as possible. Proprietary applications are more difficult to analyze, finding bugs in them is more complicated, and you have no clue whether anybody found them (and whether they were eventually fixed). Excited by the latest trendy browser that shows pictures of kitties while the page is loading? You have no clue what this piece of software contains and actually does! Just use Firefox, an established browser that supports standards and is backed by a large community.