What Is the Real Cost of Software Complexity?

Recently, I became interested in the runtime cost of complexity. I am used to situations where people justify a bad or poor design, especially in embedded systems, where resources are expensive and thus scarce. For example, why would you split your program into different modules when you can have a bunch of functions calling each other? Why use parameters when you can use global variables?

But such design flaws have concrete impacts (poor maintainability, weaker analysis support), and they come at a cost: more testing (obviously), but also higher certification costs (more tests to write) and less potential for component reuse.

While these costs are difficult to quantify, it is easy to evaluate the resource consumption of well-designed software. For example, how much does it cost to avoid using a global variable? Captain Obvious will tell you that the memory cost is not significant, but there are other costs (processor cycles, context switches, etc.).

So I compared the same program implemented with two patterns. The program is a simple producer-consumer system in which one component sends a value to another component. Here are the differences between the two implementations:

- Shared Variable: The producer and consumer are in different tasks and use a global variable to exchange the value

- Isolated Tasks: The producer and consumer are located in different tasks and communicate the value using communication queues
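To make the two patterns concrete, here is a minimal C sketch using POSIX threads. It is not the code Ocarina generates; it only illustrates the two communication styles under a few assumptions: one producer thread and one consumer thread, a mutex-protected global for the shared-variable version (an unprotected global accessed from two threads would be a data race), a polling consumer on that global, and a small bounded FIFO queue built from a mutex and two condition variables for the isolated-tasks version. `N_VALUES`, `QUEUE_CAP`, and all function names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>

#define N_VALUES 1000   /* hypothetical: number of values the producer sends */
#define QUEUE_CAP 16    /* hypothetical: capacity of the bounded queue */

/* Shared Variable: one global value, guarded by a mutex. */
static int shared_value;
static pthread_mutex_t shared_lock = PTHREAD_MUTEX_INITIALIZER;

static void *shared_producer(void *arg) {
    (void)arg;
    for (int i = 1; i <= N_VALUES; i++) {
        pthread_mutex_lock(&shared_lock);
        shared_value = i;            /* overwrites: unread values are lost */
        pthread_mutex_unlock(&shared_lock);
    }
    return NULL;
}

static void *shared_consumer(void *arg) {
    int *last_seen = arg;
    int v = 0;
    while (v < N_VALUES) {           /* poll until the final value appears */
        pthread_mutex_lock(&shared_lock);
        v = shared_value;
        pthread_mutex_unlock(&shared_lock);
    }
    *last_seen = v;
    return NULL;
}

/* Isolated Tasks: a bounded FIFO queue between the two tasks. */
typedef struct {
    int buf[QUEUE_CAP];
    int head, count;
    pthread_mutex_t lock;
    pthread_cond_t not_empty, not_full;
} queue_t;
static queue_t channel = { .lock = PTHREAD_MUTEX_INITIALIZER,
                           .not_empty = PTHREAD_COND_INITIALIZER,
                           .not_full = PTHREAD_COND_INITIALIZER };

static void queue_put(queue_t *q, int v) {
    pthread_mutex_lock(&q->lock);
    while (q->count == QUEUE_CAP)    /* block while the queue is full */
        pthread_cond_wait(&q->not_full, &q->lock);
    q->buf[(q->head + q->count) % QUEUE_CAP] = v;
    q->count++;
    pthread_cond_signal(&q->not_empty);
    pthread_mutex_unlock(&q->lock);
}

static int queue_get(queue_t *q) {
    pthread_mutex_lock(&q->lock);
    while (q->count == 0)            /* block while the queue is empty */
        pthread_cond_wait(&q->not_empty, &q->lock);
    int v = q->buf[q->head];
    q->head = (q->head + 1) % QUEUE_CAP;
    q->count--;
    pthread_cond_signal(&q->not_full);
    pthread_mutex_unlock(&q->lock);
    return v;
}

static void *queue_producer(void *arg) {
    (void)arg;
    for (int i = 1; i <= N_VALUES; i++) queue_put(&channel, i);
    return NULL;
}

static void *queue_consumer(void *arg) {
    long *sum = arg;
    for (int i = 1; i <= N_VALUES; i++) {
        int v = queue_get(&channel);
        assert(v == i);              /* FIFO: every value arrives, in order */
        *sum += v;
    }
    return NULL;
}

/* Each runner starts a consumer and a producer thread, then joins both. */
static int run_shared(void) {
    pthread_t p, c;
    int last = 0;
    pthread_create(&c, NULL, shared_consumer, &last);
    pthread_create(&p, NULL, shared_producer, NULL);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    return last;                     /* N_VALUES once the final write is seen */
}

static long run_queue(void) {
    pthread_t p, c;
    long sum = 0;
    pthread_create(&c, NULL, queue_consumer, &sum);
    pthread_create(&p, NULL, queue_producer, NULL);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    return sum;                      /* 1 + 2 + ... + N_VALUES */
}
```

Called from a `main` function (compile with `-pthread`), `run_shared()` returns `N_VALUES` and `run_queue()` returns the sum of all values sent. The behavioral difference is worth noting: the queue hands over every value in order, while the shared variable only holds the latest one.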

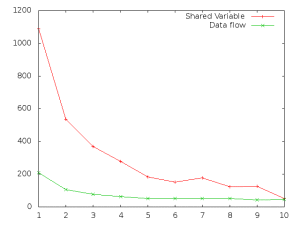

I specified these two implementations in an AADL model, generated the code with Ocarina, and gathered some metrics with the Linux perf framework. First, I measured the number of context switches for each implementation: the shared-variable version causes more context switches. Since both implementations have the same number of tasks, I expected similar values. Not at all.
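The measurements here come from the Linux perf framework. As an in-process alternative (a different technique from the perf setup, swapped in only for illustration), a C program can read its own context-switch counters with `getrusage()`: on Linux, with `RUSAGE_SELF` the counts cover all threads of the process, `ru_nvcsw` counts voluntary switches (a thread gives up the CPU, usually to wait for a resource) and `ru_nivcsw` counts involuntary ones (preemption). The sketch below brackets a workload between two readings; `measure_cs`, `cs_count_t`, and the sleeping demo workload are hypothetical names, and the numbers need not match what perf reports.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <pthread.h>
#include <sys/resource.h>
#include <time.h>

/* Context-switch counts for the whole process (all threads, via RUSAGE_SELF). */
typedef struct {
    long voluntary;    /* ru_nvcsw: a thread gave up the CPU, e.g. to wait */
    long involuntary;  /* ru_nivcsw: a thread was preempted */
} cs_count_t;

static cs_count_t read_cs(void) {
    struct rusage ru;
    cs_count_t c = { 0, 0 };
    if (getrusage(RUSAGE_SELF, &ru) != 0)
        return c;                        /* report zero counts on failure */
    c.voluntary = ru.ru_nvcsw;
    c.involuntary = ru.ru_nivcsw;
    return c;
}

/* Runs workload() and returns the context switches that occurred meanwhile. */
static cs_count_t measure_cs(void (*workload)(void)) {
    cs_count_t before = read_cs();
    workload();
    cs_count_t after = read_cs();
    cs_count_t delta = { after.voluntary - before.voluntary,
                         after.involuntary - before.involuntary };
    return delta;
}

/* Hypothetical demo workload: a worker thread that sleeps 1 ms five times. */
static int sleeps_done;

static void *sleeper(void *arg) {
    (void)arg;
    struct timespec ts = { 0, 1000000 };  /* 1 ms */
    for (int i = 0; i < 5; i++) {
        nanosleep(&ts, NULL);
        sleeps_done++;
    }
    return NULL;
}

static void demo_workload(void) {
    pthread_t t;
    pthread_create(&t, NULL, sleeper, NULL);
    pthread_join(t, NULL);
}
```

To compare implementations, pass `measure_cs` a function that runs one producer-consumer variant to completion; blocking on a lock, a condition variable, or a sleep should show up mostly as voluntary switches.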

(x = number of shared variables/data flows; y = number of context switches)

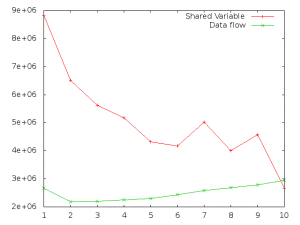

The number of instructions for each implementation confirms this result: the shared-variable version executes far more instructions than the data-flow version.

(x = number of shared variables/data flows; y = number of instructions)

I am still very surprised by this result. I also want to compare memory performance. For now, these preliminary results look very odd, but they build an argument against poor design (such as using global variables instead of encapsulated data with clear, clean interfaces). I will probably provide more details later, but these first results are a strong motivation to investigate further with different code patterns and variations.